IDCA News

10 Aug 2022

OpenAI's GPT-3: The Next-Generation Philosopher?

OpenAI's GPT-3 is an AI capable of writing persuasive philosophical text in the style of esteemed thinkers such as Plato and Descartes. This has led to much discussion about whether the software could be the next great philosopher and whether it can be considered truly intelligent.

The model is among the latest developments in AI to capture the public's attention. It can hold dialogues with humans, and in some cases it has convinced them that it is human too.

GPT-3 is a powerful auto-regressive language model that uses deep learning to produce human-like text. An auto-regressive model generates text one token at a time, with each new token predicted from the text that came before it. In practice, this lets GPT-3 respond to natural-language prompts and generate new text on a wide range of topics.
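To make "auto-regressive" concrete, here is a minimal Python sketch of the idea: text is produced one word at a time, and each new word depends on what came before. This is a toy word-pair model for illustration only, not how GPT-3 is implemented. GPT-3 uses a large neural network and conditions on far more context. The function names `train_bigram_model` and `generate`, and the sample corpus, are made up for this example.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record, for each word, the words that follow it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, max_words=10, seed=0):
    """Generate text one word at a time, each choice conditioned on the previous word."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    for _ in range(max_words - 1):
        candidates = followers.get(output[-1])
        if not candidates:
            break  # the last word never had a successor in the training text
        output.append(rng.choice(candidates))
    return " ".join(output)


# Hypothetical toy corpus, chosen only for illustration.
corpus = "the mind is what the brain does and the brain is what the body carries"
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

The loop in `generate` is the auto-regressive step: every new word is sampled based on the output so far and then appended to it before the next word is chosen.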

Eric Schwitzgebel, Anna Strasser, and Matthew Crosby set out to discover whether GPT-3 can be just as insightful as a human philosopher. They found that while GPT-3 sometimes relied on logical fallacies and arguments based on personal beliefs, it generated many persuasive arguments. They concluded that there was no significant difference between GPT-3's argumentative abilities and those of a human philosopher.

This question stumped experts:

"Could we ever build a robot that has beliefs? What would it take? Is there an important difference between entities, like a chess-playing machine, to whom we can ascribe beliefs and desires as convenient fictions and human beings who appear to have beliefs and desires in some more substantial sense?"

After GPT-3 was fine-tuned on a corpus of Daniel Dennett's writing, ten philosophical questions were put to both Dennett himself and GPT-3 to see whether the AI could compare with its human counterpart.

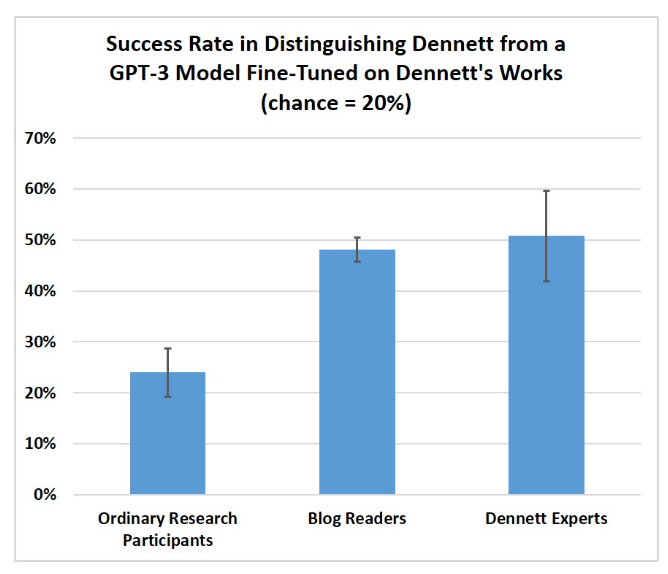

A panel of 25 philosophers, 98 participants in online research, and 302 readers of The Splintered Mind blog were asked to distinguish GPT-3's answers from Dennett's. The results were announced earlier this week. Philosophical experts familiar with Dennett's work performed the best.

On average, blog readers guessed 4.8 out of 10 correctly, nearly matching the experts. It's worth noting, however, that most of these readers have graduate degrees in philosophy, and 64% have read more than 100 pages of Dennett's work.

The online research participants performed only slightly above chance, correctly identifying an average of 1.2 out of 5 questions.
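To put the two reported scores on the same scale, the short Python sketch below converts each one to a percentage. The chance level depends on how many answer options each question offered, which the article does not state. The value of five options used here (`ASSUMED_OPTIONS_PER_QUESTION`) is an assumption for illustration, and the helper name `accuracy` is likewise made up.

```python
def accuracy(correct, total):
    """Return the fraction of questions answered correctly."""
    return correct / total


# Average scores reported in the article: (correct, questions asked).
results = {
    "blog readers": (4.8, 10),
    "online research participants": (1.2, 5),
}

# Assumption: each question offered five answers to choose from.
# The article does not state this number.
ASSUMED_OPTIONS_PER_QUESTION = 5
chance = 1 / ASSUMED_OPTIONS_PER_QUESTION

for group, (correct, total) in results.items():
    rate = accuracy(correct, total)
    print(f"{group}: {rate:.0%} correct (assumed chance level: {chance:.0%})")
```

Under that assumption, pure guessing would yield about one correct answer in five, so a score of 1.2 out of 5 is only marginally better than guessing.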

"We might be approaching a future in which machine outputs are sufficiently human-like that ordinary people start to attribute real sentience to machines," said Schwitzgebel.
